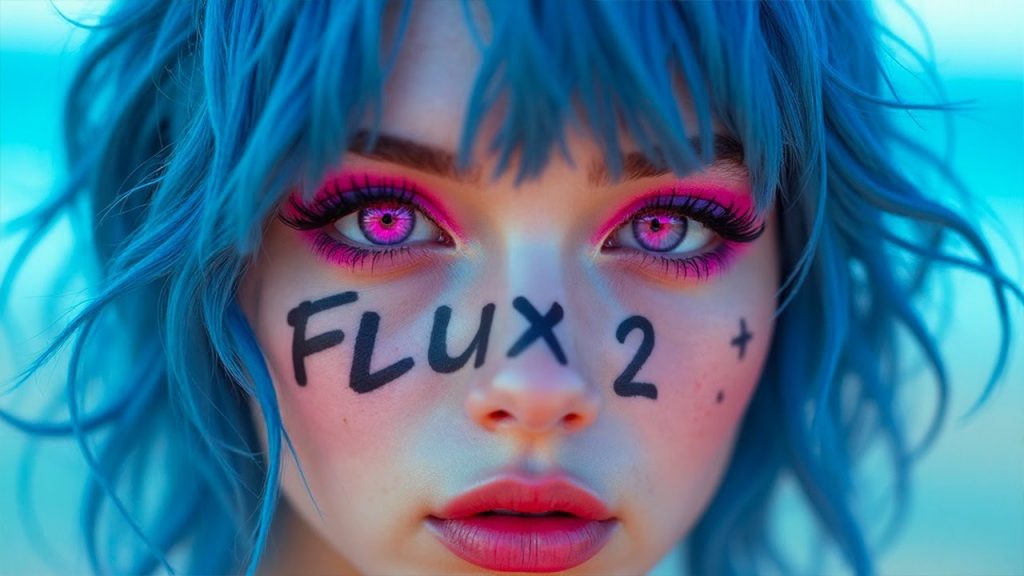

Now I’m Truly Terrified… FLUX 2 Made Reality Seem Distorted

The Launch of Flux 2 by Black Forest Labs

Black Forest Labs has unveiled Flux 2, its new family of image generation and editing models. The model produces highly realistic images with consistent characters and refined lighting, and it can keep a cohesive style across up to ten reference images. Together with improved text rendering, these upgrades have caught the attention of designers and developers looking for a more reliable and efficient creative workflow.

Key Features of Flux 2

One of the standout features of Flux 2 is its multi-reference system. Users can supply several images as references, which keeps a character, product, or visual style consistent across generations. This is especially useful for product shots or multi-panel sequences: instead of long, repetitive prompts or a separate setup for each image, consistency is handled by the model's architecture itself.

Architectural Innovation

Black Forest Labs took a fresh approach to Flux 2's architecture. Rather than repurposing its previous systems, it built a hybrid framework. The first component is a Mistral 3 24B vision-language model responsible for semantic understanding: it reads both the text prompt and the reference images to work out how objects relate in terms of lighting, material behavior, and spatial arrangement.

The second component is a rectified flow transformer that handles image structure, including composition and fine visual detail. Black Forest Labs also trained a new variational autoencoder (VAE) from scratch, aiming for a better balance of learnability, compression, and image quality, so that outputs improve with fewer trade-offs.
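
Rectified flow, the general technique behind the second component, trains a network to predict a velocity that moves a sample along a straight line between noise and data; generating an image then amounts to integrating that velocity from pure noise back to the data end in a fixed number of steps. The following is a minimal, generic sketch under loud assumptions, not Black Forest Labs' code: the learned transformer is replaced by a hypothetical `oracle_velocity` that already knows the single point of a toy one-point "dataset", and plain Euler steps stand in for whatever sampler Flux 2 actually uses.

```python
import random


def oracle_velocity(x_t, t, target):
    """Hypothetical stand-in for the learned model.

    Rectified flow uses x_t = (1 - t) * x0 + t * noise, whose velocity is
    noise - x0. For a one-point dataset x0 = target, that equals (x_t - x0) / t.
    """
    return [(xi - ti) / t for xi, ti in zip(x_t, target)]


def sample_rectified_flow(target, num_steps=8, seed=0):
    """Integrate from t = 1 (pure noise) to t = 0 (data) with Euler steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from Gaussian noise
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = 1.0 - i * dt  # current time, decreasing toward 0
        v = oracle_velocity(x, t, target)
        x = [xi - dt * vi for xi, vi in zip(x, v)]  # step from t to t - dt
    return x


print(sample_rectified_flow([0.5, -1.0, 2.0]))
```

Because the oracle velocity is exact and the path is straight, the Euler steps land on the target. With a learned, imperfect velocity, fewer steps trade accuracy for speed, which is the kind of knob a step-count setting exposes.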

Variants of Flux 2

Flux 2 comes in several versions tailored to meet different needs:

- Flux 2 Pro: The flagship version designed to compete with closed systems, available in their playground and API.

- Flux 2 Flex: A customizable version that lets users tune parameters such as the number of steps and the guidance scale, trading speed against quality.

- Flux 2 Dev: An open-weight model with 32 billion parameters that combines text-to-image generation and image editing.

- Flux 2 Klein: A forthcoming smaller model that aims to keep strong performance despite its size, slated to be open-sourced under the Apache 2.0 license.

All variants incorporate text-based editing and multi-reference capabilities, streamlining the workflow for users.
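
The guidance scale mentioned for Flux 2 Flex is a standard control in diffusion and flow-matching models. One common way to apply it is classifier-free guidance, which extrapolates from the model's unconditional prediction toward its prompt-conditioned prediction. The source does not say how Flex implements its guidance scale (some Flux models use a distilled guidance input instead), so treat this as a generic sketch; `cond` and `uncond` are hypothetical stand-ins for two model outputs at the same sampling step.

```python
from typing import List, Sequence


def classifier_free_guidance(cond: Sequence[float], uncond: Sequence[float],
                             guidance_scale: float) -> List[float]:
    """Blend two predictions: scale 0 -> unconditional, 1 -> conditional, >1 -> amplified."""
    if len(cond) != len(uncond):
        raise ValueError("predictions must have the same shape")
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]


cond = [1.0, 2.0]    # hypothetical prompt-conditioned prediction
uncond = [0.0, 1.0]  # hypothetical unconditional prediction
for scale in (0.0, 1.0, 3.0):
    print(scale, classifier_free_guidance(cond, uncond, scale))
# 0.0 [0.0, 1.0]
# 1.0 [1.0, 2.0]
# 3.0 [3.0, 4.0]
```

Higher scales push the output harder toward the prompt at the risk of oversaturated or rigid images, and this form of guidance needs a second model evaluation per step, which is one reason a guidance setting also affects speed.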

High Performance and Benchmarks

Flux 2 has been praised for its benchmark results, posting high Elo scores in head-to-head preference evaluations while keeping inference costs low. In a direct comparison with Google's Nano Banana Pro, it handled complex prompts well, including imaginative scenes that test a model's spatial reasoning. This points to a strength in managing the relationships between objects within an image, a key requirement for demanding creative work.
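
For readers unfamiliar with Elo: it turns head-to-head preference votes into ratings, and the gap between two ratings maps to an expected win rate. This sketch shows the textbook formula with a simple sequential update. The K-factor of 32 is an arbitrary illustrative choice, and public leaderboards may fit ratings differently (for example with a Bradley-Terry model), so it does not reproduce any published Flux 2 score.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_update(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> float:
    """Return A's new rating after one comparison (score_a: 1 win, 0.5 tie, 0 loss)."""
    return rating_a + k * (score_a - elo_expected_score(rating_a, rating_b))


print(elo_expected_score(1000, 1000))            # 0.5
print(round(elo_expected_score(1400, 1000), 3))  # 0.909
print(elo_update(1000, 1000, 1.0))               # 1016.0
```

A 400-point gap corresponds to roughly a 91% expected win rate, so rating differences are best read as head-to-head win probabilities rather than absolute quality scores.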

The Impact of Hunyuan Video 1.5

As if the launch of Flux 2 wasn't enough, Tencent has also released Hunyuan Video 1.5, an open-source AI video generator that raises the bar for open video creation. Despite its compact size of just 8.3 billion parameters, it offers controllable motion, a cinematic look, and frame stability beyond what is usually expected from a model this small.

Enhanced Video Generation Features

Two of the biggest limitations of open-source video models have been their need for massive amounts of VRAM and their difficulty keeping motion and physics consistent. Hunyuan Video 1.5 addresses both: it runs on consumer GPUs and delivers natural motion, better instruction following, and strong image-to-video consistency, so the content of the initial frame stays intact as the scene develops.

The model comes in two variants, outputting 480p or 720p, and an additional super-resolution stage upscales video to 1080p without the usual interpolation artifacts. That matters in a field where preserving quality across resolutions has been a persistent problem.

Dynamic Motion and Realism

The instruction-following capabilities of this model are particularly noteworthy. Hunyuan Video 1.5 translates complex prompts into detailed camera movements, lighting adjustments, and sequential actions accurately. Whether in English or Chinese, it showcases impressive versatility when generating cinematic scenes.

Demos of the model illustrate its potential: a figure skater with stable motion, a DJ whose facial expressions stay consistent, and scenes with natural lighting and intricate detail.

Comparison with Leading Models

In comparisons with current leading open-source models, such as Open Sora 1.22, Hunyuan Video 1.5 shows notable improvements. Whether the task is a complex camera move through a chaotic scene or a nuanced action, Hunyuan performs better more consistently. Both models still share weaknesses in certain areas, such as character recognition, but Hunyuan leads in overall instruction adherence and visual effects.

Advanced Technology Under the Hood

Hunyuan Video 1.5 pairs a unified diffusion transformer with a 3D causal VAE, which compresses video in both space and time while preserving output quality. Its selective and sliding tile attention (SSTA) mechanism restricts attention to relevant spatiotemporal regions instead of every pair of tokens, which keeps compute costs under control, especially on long sequences.
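
To see why attention cost matters, it helps to count latent tokens. This sketch rests on several assumptions the source does not state: a causal 3D VAE that encodes the first frame on its own and then compresses time by 4x and space by 16x, one transformer token per latent cell, and an illustrative 121-frame 720p clip. It then counts how many (query tile, key tile) pairs survive a simple sliding-window tile mask. Only the sliding-window half of SSTA is modeled; the selective pruning of redundant blocks is omitted, and the tile and window sizes are hypothetical.

```python
def causal_vae_latent_shape(frames, height, width, t_down=4, s_down=16):
    """Latent (T, H, W) for a causal 3D VAE (compression factors are assumptions).

    The first frame is encoded on its own; the remaining frames are grouped in
    blocks of t_down, hence 1 + (frames - 1) // t_down latent frames.
    """
    if (frames - 1) % t_down or height % s_down or width % s_down:
        raise ValueError("input shape not divisible by the assumed factors")
    return (1 + (frames - 1) // t_down, height // s_down, width // s_down)


def pairs_within_window(n, w):
    """Number of (i, j) index pairs in range(n) with |i - j| <= w."""
    return sum(1 for i in range(n) for j in range(n) if abs(i - j) <= w)


def sliding_tile_pairs(latent_shape, tile_size, window):
    """Count tile pairs kept by a box-shaped sliding window vs. full attention.

    A box window is separable, so the kept count is a product over the axes.
    """
    grid = [-(-dim // tile) for dim, tile in zip(latent_shape, tile_size)]  # ceil division
    kept = 1
    for n, w in zip(grid, window):
        kept *= pairs_within_window(n, w)
    num_tiles = grid[0] * grid[1] * grid[2]
    return kept, num_tiles ** 2


latent = causal_vae_latent_shape(121, 720, 1280)
tokens = latent[0] * latent[1] * latent[2]
kept, total = sliding_tile_pairs(latent, tile_size=(4, 9, 8), window=(1, 1, 1))
print(latent, tokens)             # (31, 45, 80) 111600
print(kept, total, kept / total)  # 8008 160000 0.05005
```

Under these assumptions, about 5% of tile pairs are computed instead of all of them. The real savings depend on SSTA's actual tile sizes, window, and selection rule.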

Tencent’s rigorous training optimizations, including a multi-stage pipeline, have refined the model’s capabilities in motion coherence, visual aesthetics, and alignment with human preferences.

Accessibility and User Experience

To make local use practical without overwhelming system resources, Hunyuan Video 1.5 integrates with ComfyUI. FP8 and GGUF versions let users pick a build that fits their hardware; the smallest GGUF model is under 5GB and still supports full image-to-video generation.
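
These file sizes follow from simple arithmetic: weight storage is roughly parameter count times bits per weight. This sketch applies that to the 8.3-billion-parameter model. The bit widths are rough assumptions (real GGUF quantization types mix bit widths and store extra scale factors), and the estimate covers the transformer weights only, not the text encoder, VAE, or activation memory needed at run time.

```python
def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 8.3e9  # parameter count quoted for Hunyuan Video 1.5
for label, bits in [("BF16", 16), ("FP8", 8), ("~4-bit GGUF (assumed)", 4)]:
    print(f"{label}: {weight_size_gb(PARAMS, bits):.2f} GB")
# BF16: 16.60 GB
# FP8: 8.30 GB
# ~4-bit GGUF (assumed): 4.15 GB
```

At about 4 bits per weight the weights alone come to roughly 4.2 GB, which is consistent with a GGUF file under 5GB.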

Conclusion

The rapid progress in AI-generated images and video represented by Flux 2 and Hunyuan Video 1.5 signals a major shift in the creative landscape. As these tools evolve, they are likely to change how creators approach visual projects, from concept to execution. With open-source models increasingly matching or even surpassing commercial tools, the outlook for visual AI is very promising.

Which upgrade resonated most with you? Share your thoughts! If you found this analysis helpful, please like and subscribe for more content on the advancements in AI technology.


Thanks for reading. Please let us know your thoughts and ideas in the comment section.
