A Robot Navigates an Office Space with Google Gemini

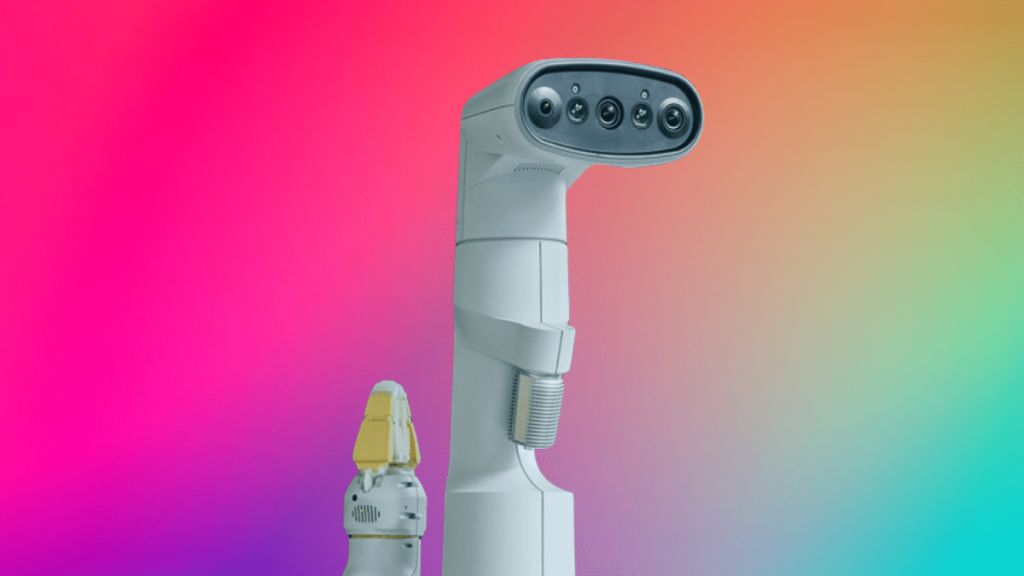

Google has unveiled a project that pairs its Gemini AI model with a robot from Alphabet’s now-defunct Everyday Robots division. Although the division was shut down last year, its robots are still in use. In the experiment, Gemini lets a robot follow natural-language commands and navigate an office space, offering a glimpse of where AI-driven robotics may be heading.

Gemini works here as a vision language model (VLM): a model trained on images, videos, and text that can reason about what it sees as well as what it is told. That combination of perception and language lets it carry out tasks and answer questions that depend on understanding a physical scene, and the demo shows how such a model can be put to work in a practical setting.

Google’s Latest Experiment

Google has showcased a new project involving its Gemini AI model and a robot from Alphabet’s Everyday Robots division. Although the division was shut down last year, its robots still exist, and in this experiment Gemini was used to teach one of them to respond to commands and navigate an office space.

To do this, Gemini relies on its abilities as a vision language model (VLM). Because VLMs are trained on images, videos, and text, they can handle tasks that require perception and answer questions about what they see. The experiment is a concrete step toward combining large AI models with physical robots.

Robot Navigates the Office

In the first video, a Google employee asks the robot to take him somewhere he can draw things. The robot replies that it needs a minute to think, then leads him to a whiteboard. The request never names a destination, so the robot has to infer that “somewhere to draw” means a whiteboard and then find its way there.
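One way to picture the first step, turning an indirect request into a destination, is as a ranking problem: score each known location against the request and pick the best match. The sketch below is a toy stand-in, not Google’s method. The location names and descriptions are hypothetical, and a simple word-overlap score replaces the VLM, which in the real demo reasons over what the robot sees rather than over hand-written text labels.

```python
import re

# Hypothetical text descriptions of office locations (assumption: the real
# robot works from camera images, not labels like these).
LOCATIONS = {
    "whiteboard": "a whiteboard where people can draw and sketch ideas",
    "kitchen": "a kitchen where people can get coffee and snacks",
    "robotics lab": "a testing area where robots are tried out",
}


def tokens(text: str) -> set[str]:
    """Lowercase word set, used as a crude stand-in for semantic understanding."""
    return set(re.findall(r"[a-z]+", text.lower()))


def choose_goal(request: str, locations: dict[str, str]) -> str:
    """Return the location whose description shares the most words with the request.

    A VLM would judge relevance from images and context; counting shared
    words is only a placeholder that makes the control flow concrete.
    """
    request_words = tokens(request)
    return max(locations, key=lambda name: len(request_words & tokens(locations[name])))


print(choose_goal("Take me somewhere I can draw things", LOCATIONS))  # whiteboard
```

Word overlap fails as soon as a request uses words that do not appear in any description, which is exactly the gap a model trained on images, video, and text is meant to close.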

In another video, the robot is told to follow directions drawn as a map on a whiteboard to reach the Blue Area. It follows them to a robotics testing area and announces, “I’ve successfully followed the directions on the whiteboard.” Together, the two demos show the robot acting on both visual and textual instructions.
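Once a destination is known, the robot still needs a route. A common textbook way to model this is a graph of places connected by walkable links, searched with breadth-first search (BFS) to find the path with the fewest hops. The article does not say how the robot plans its path, so the office graph and place names below are hypothetical and the example is illustration only.

```python
from collections import deque

# Hypothetical office layout: each place lists the places directly reachable from it.
OFFICE = {
    "lobby": ["hallway"],
    "hallway": ["lobby", "kitchen", "whiteboard", "blue area"],
    "kitchen": ["hallway"],
    "whiteboard": ["hallway", "blue area"],
    "blue area": ["hallway", "whiteboard"],
}


def plan_route(graph, start, goal):
    """Breadth-first search: return the fewest-hop path from start to goal, or None."""
    previous = {start: None}  # each visited place -> the place we reached it from
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk the back-pointers from the goal to the start, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in graph.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None  # goal is unreachable from start


print(plan_route(OFFICE, "lobby", "blue area"))  # ['lobby', 'hallway', 'blue area']
```

BFS is chosen here only because it is the simplest correct shortest-path search when every link counts the same; a real robot would also have to handle distances, obstacles, and low-level motion.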

The Role of Vision Language Models

Vision language models are central to this experiment. Because a VLM combines visual perception with language processing, it can connect an instruction such as “take me somewhere to draw” to what the robot actually sees around it. That pairing is what lets the Gemini-powered robot guide an employee to a specific location.

Training on large collections of images, videos, and text gives VLMs the context they need to interpret commands that are phrased loosely or that refer to objects in the scene. The experiment suggests that such models could make AI useful for many real-world tasks that depend on both seeing and understanding language.

Significance of the Experiment

This experiment is significant for several reasons. First, it shows AI handling a practical, everyday task. By navigating an office and following commands, the robot hints at how AI could take over routine errands in daily operations and improve efficiency and productivity.

Second, it reflects progress in AI and robotics. A robot that can understand and act on indirect or visual instructions points toward more sophisticated applications in fields such as healthcare, manufacturing, and customer service.

Lastly, it underscores the value of ongoing research and development in AI. Continued investment is likely to drive further innovation and open up new possibilities for automation and intelligent systems.

Potential Challenges

Despite the success of this experiment, several challenges remain. One is ensuring that the robot behaves reliably and accurately in varied conditions, such as changes in lighting, furniture layout, or foot traffic. AI systems must be robust enough to handle different environments and scenarios.

Another challenge involves the ethical questions surrounding AI and robotics. As AI systems become more advanced, it’s crucial to address issues related to privacy, security, and the impact on human jobs. Ethical guidelines and regulatory frameworks can help mitigate these concerns.

Moreover, there’s the technical challenge of integrating AI systems with existing technologies. Ensuring seamless communication and coordination between AI and other systems is essential for their effective deployment.

Future Prospects

The success of this project opens up promising prospects. With continued advances in AI and robotics, more sophisticated applications are likely to follow, and these technologies could reshape industries from healthcare to manufacturing to customer service.

One potential application is in healthcare, where AI-powered robots could assist in surgeries, patient care, and administrative tasks. This could improve efficiency and accuracy in medical procedures and reduce the burden on healthcare professionals.

In the manufacturing sector, AI robots could streamline production processes, perform quality control, and manage inventories, which could raise productivity and reduce operational costs. The possibilities are broad, and current demos like this one are only an early step.

Conclusion

The integration of AI and robotics is a promising field with immense potential. This experiment with Gemini AI and the robotic assistant demonstrates the progress made in this area. By overcoming challenges and continuing research and development, we can unlock new possibilities and transform various industries with advanced AI technologies.