Google’s SIMA 2 Agent Leverages Gemini for Reasoning and Action in Virtual Worlds

Image Credits: Google DeepMind

Google DeepMind Unveils SIMA 2: A Leap Toward Generalist AI

On Thursday, Google DeepMind introduced SIMA 2, the next generation of its generalist AI agent. The new model integrates the language and reasoning capabilities of Gemini, Google’s large language model, marking a shift from simply following instructions to understanding and interacting with its environment.

The Evolution of SIMA

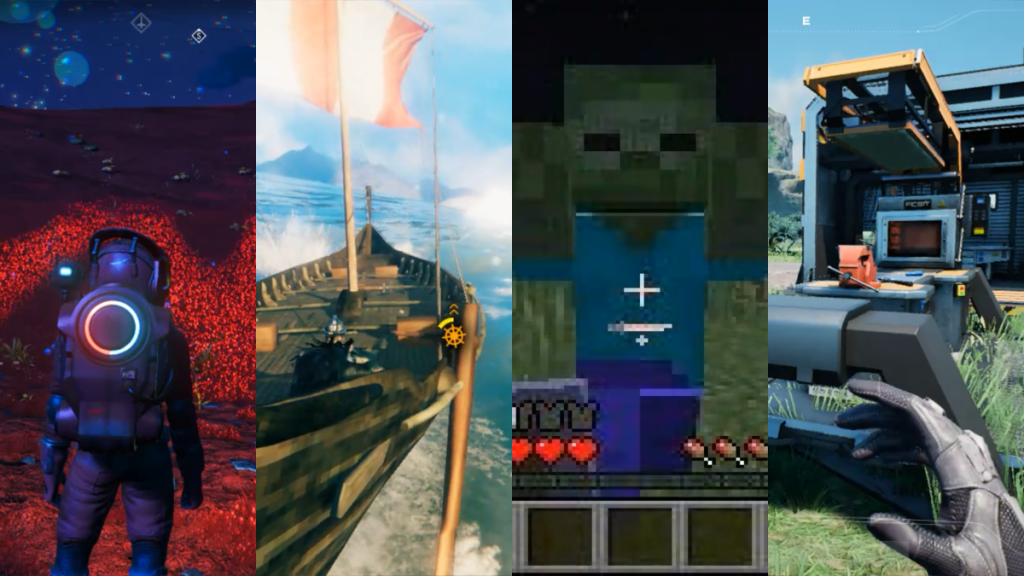

DeepMind’s approach to AI development relies on rich training data and carefully designed training methods, as seen in projects like AlphaFold. The original SIMA was trained on hundreds of hours of video game data to learn to play a range of 3D games the way a human would. Unveiled in March 2024, SIMA 1 could follow basic instructions, but it struggled with complex tasks, succeeding only 31% of the time compared with 71% for human players.

Joe Marino, a senior research scientist at DeepMind, said that “SIMA 2 is a step change improvement over SIMA 1.” The update makes SIMA a more capable agent, able to complete complex tasks in environments it has never seen before. Marino emphasized that SIMA 2’s ability to improve itself from its own experience is a significant step toward more general-purpose robots and, ultimately, artificial general intelligence (AGI).

What Sets SIMA 2 Apart?

SIMA 2 is built on the Gemini 2.5 Flash-Lite model. DeepMind defines AGI as a system capable of a wide range of intellectual tasks, with the ability to learn new skills and generalize knowledge across different domains.

The Importance of Embodied Agents

DeepMind places considerable emphasis on the role of “embodied agents” in developing general intelligence. Marino explained that such agents interact with a physical or virtual world, observing it and taking actions much as a human or robot would. Non-embodied agents, by contrast, might manage a calendar, take notes, or execute code.

According to Jane Wang, a senior research scientist with a neuroscience background, SIMA 2 expands its capabilities beyond gaming. “We’re demanding it to genuinely understand the situation, interpret user requests, and respond in a way that makes sense,” she remarked.

The Power of Gemini Integration

By integrating Gemini, SIMA 2 doubles the performance of its predecessor, combining Gemini’s language and reasoning abilities with the embodied skills it acquired through training. In one demonstration, Marino showed SIMA 2 playing “No Man’s Sky,” where the agent described its surroundings and then worked out its next step by recognizing and interacting with a distress beacon.

In another scenario, SIMA 2 was asked to find the house “the color of a ripe tomato.” The agent reasoned that ripe tomatoes are red and navigated to the red house.

Gemini integration also lets SIMA 2 interpret and act on emoji-based commands. For instance, the command 🪓🌲 instructs it to chop down a tree.

Navigating in Photorealistic Worlds

In demonstrations, SIMA 2 navigated newly generated photorealistic worlds created by Genie, DeepMind’s world model, identifying and interacting with common objects such as benches, trees, and butterflies.

Self-Improvement Through AI

A key feature of SIMA 2 is its ability to improve itself, enabled by Gemini. Unlike its predecessor, which was trained entirely on human gameplay, SIMA 2 uses human gameplay only as a baseline to train a strong initial model. When the agent enters a new environment, another Gemini model generates new tasks for it, while a separate reward model scores the agent’s attempts. Through these self-generated experiences, SIMA 2 learns from its mistakes and improves its performance, much as a human learns through trial and error.
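To make the shape of that loop concrete, here is a toy Python sketch. It is not DeepMind’s implementation, and every name in it is hypothetical: the “task setter” simply cycles through a fixed list of string tasks (standing in for the Gemini model that proposes tasks), the “reward model” is a lookup table that returns 1.0 for success and 0.0 for failure, and the agent learns with a simple tabular value estimate. Human demonstrations seed only one task; the agent has to discover the rest through trial and error.

```python
from dataclasses import dataclass, field
from itertools import cycle

# Hypothetical toy world: each task is satisfied by exactly one action.
GROUND_TRUTH = {
    "chop down a tree": "use_axe",
    "go to the red house": "walk_to_red_house",
    "answer the distress beacon": "interact_beacon",
    "sit on the bench": "sit",
}
ACTIONS = sorted(set(GROUND_TRUTH.values()))


def propose_tasks(tasks):
    """Stand-in for the task-setting model: endlessly proposes tasks."""
    return cycle(tasks)


def reward_model(task, action):
    """Stand-in for the reward model: 1.0 if the attempt succeeds, else 0.0."""
    return 1.0 if GROUND_TRUTH[task] == action else 0.0


@dataclass
class Agent:
    totals: dict = field(default_factory=dict)  # summed reward per (task, action)
    counts: dict = field(default_factory=dict)  # attempts per (task, action)

    def pretrain(self, demonstrations):
        """Seed the policy from human demonstrations (the 'initial model')."""
        for task, action in demonstrations:
            self.totals[(task, action)] = 1.0
            self.counts[(task, action)] = 1

    def value(self, task, action):
        n = self.counts.get((task, action), 0)
        # Untried actions get an optimistic prior of 0.5: better than a known
        # failure (0.0) but worse than a known success (1.0).
        return self.totals.get((task, action), 0.0) / n if n else 0.5

    def act(self, task):
        # Greedy choice; max() keeps the first action on ties.
        return max(ACTIONS, key=lambda a: self.value(task, a))

    def learn(self, task, action, reward):
        key = (task, action)
        self.totals[key] = self.totals.get(key, 0.0) + reward
        self.counts[key] = self.counts.get(key, 0) + 1


def self_improve(agent, tasks, steps):
    """Propose a task, attempt it, score the attempt, learn from the score."""
    history = []
    for _, task in zip(range(steps), propose_tasks(tasks)):
        action = agent.act(task)
        reward = reward_model(task, action)
        agent.learn(task, action, reward)
        history.append(reward)
    return history


agent = Agent()
# Human gameplay covers only one task; the others are "new environment" tasks.
agent.pretrain([("chop down a tree", "use_axe")])
history = self_improve(agent, list(GROUND_TRUTH), steps=40)
print(all(agent.act(t) == GROUND_TRUTH[t] for t in GROUND_TRUTH))  # True
```

The optimistic prior for untried actions is one simple way to force exploration without randomness; a real agent acting in a 3D world would need far richer policies, task generation, and reward scoring than this lookup-table stand-in.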

Towards General-Purpose Robotics

DeepMind aims for SIMA 2 to pave the way for general-purpose robots. Frederic Besse, a senior staff research engineer, said that a capable robotic system needs both a deep understanding of the world and the ability to reason about it. For instance, a humanoid robot asked to check how many cans of beans are in a cupboard must understand what beans and cupboards are and then navigate to the right location.

Besse noted that SIMA 2 focuses on higher-level behavior rather than low-level actions such as controlling physical joints or wheels.

The Future of SIMA 2

DeepMind has not given a timeline for integrating SIMA 2 into physical robotics systems. Besse noted that DeepMind’s recently introduced robotics foundation models were trained separately from SIMA. Those models can reason about the physical world and create multi-step plans to complete a task.

No release date for SIMA 2 has been set. Wang said the primary goal is to share DeepMind’s progress with the world and explore possible collaborations and applications.

Conclusion

The unveiling of SIMA 2 marks a significant step in AI research, combining Gemini’s reasoning with embodied skills in a way that goes well beyond SIMA 1. With its ability to self-improve and operate in unfamiliar environments, SIMA 2 could help pave the way for general-purpose robots, moving closer to intelligent systems that can understand and navigate the complexities of the real world. As DeepMind continues its research, it will be worth watching how SIMA 2 is applied across different fields.
