NVIDIA’s AI: Uncannily Realistic Movement.

The Quest for Realistic Digital Movement: How DeepMimic Cracked the Code

The holy grail of computer animation has always been to create digital characters that move and behave with the natural fluidity and nuance of real humans. Imagine a world where virtual actors are indistinguishable from their flesh-and-blood counterparts, opening up possibilities for immersive gaming, realistic simulations, and groundbreaking visual effects. This is the dream, but achieving it is a colossal challenge.

Copying motion capture data seems straightforward: record a human running, jumping, or even reading a paper, then transfer the recording to a digital character. The reality is far more complex. Motion capture data provides the “what”: a record of the movement. It doesn’t provide the “how”: the intricate web of forces, torques, and muscle activations required to drive a digital skeleton and create realistic movement.

Each virtual character has a network of joints and muscles. To truly replicate human motion, one needs to calculate the precise forces and torques exerted at every joint at every moment in time. This is a monumental computational task.
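To make the idea of "a torque at every joint" concrete, here is a minimal sketch of a proportional-derivative (PD) controller, a common way for physics-based character controllers to turn a desired joint angle into a torque. The function name and the gain values `kp` and `kd` are illustrative assumptions, not settings taken from any particular paper.

```python
def pd_torque(target_angle, angle, angular_velocity, kp=300.0, kd=30.0):
    """Torque for a single joint from a PD controller.

    kp pulls the joint toward the target angle; kd damps its motion.
    Both gains are hypothetical values chosen for illustration.
    """
    return kp * (target_angle - angle) - kd * angular_velocity


# A joint at 0.5 rad, at rest, asked to move to 1.0 rad.
torque = pd_torque(target_angle=1.0, angle=0.5, angular_velocity=0.0)
print(torque)
```

A full character repeats this calculation for every joint at every simulation step, which is part of why the problem is so demanding.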

DeepMimic: Turning Motion Imitation into a Video Game

Enter DeepMimic, a groundbreaking research paper from 2018 that presented a novel approach to motion imitation. The core idea? Turn motion imitation into a game.

DeepMimic uses reinforcement learning, a technique where an AI agent learns to perform a task by trial and error. The system sets up a virtual environment where the digital character is rewarded for mimicking specific motions. This environment acts like a video game, with the character’s controller trying to maximize its score.

Each joint, angle, and point of contact in the virtual character is assigned a “score counter” that measures how closely it matches the reference motion. The controller then tweaks the character’s movements over countless iterations, learning to earn the highest score by imitating the motion capture data as closely as possible.
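As a rough illustration of the “score counter” idea, the sketch below scores how closely a simulated pose matches a reference pose from motion capture. It is a simplified stand-in, not the paper's actual reward: the function name, the choice of two terms (joint angles and joint velocities), the weights, and the scale factors are all assumptions made for this example.

```python
import math


def imitation_reward(sim_angles, ref_angles, sim_velocities, ref_velocities,
                     w_pose=0.7, w_vel=0.3, pose_scale=2.0, vel_scale=0.1):
    """Score in (0, 1] for how well the simulated character matches the reference.

    Each term is exp(-scale * squared_error), so a perfect match scores 1
    and the score decays smoothly as the error grows.
    All weights and scales are hypothetical values for illustration.
    """
    pose_error = sum((s - r) ** 2 for s, r in zip(sim_angles, ref_angles))
    vel_error = sum((s - r) ** 2 for s, r in zip(sim_velocities, ref_velocities))
    pose_score = math.exp(-pose_scale * pose_error)
    vel_score = math.exp(-vel_scale * vel_error)
    return w_pose * pose_score + w_vel * vel_score


# Reference pose and velocities for three joints (made-up numbers).
ref_angles = [0.1, -0.4, 0.8]
ref_velocities = [0.0, 1.0, -0.5]

perfect = imitation_reward(ref_angles, ref_angles, ref_velocities, ref_velocities)
sloppy = imitation_reward([0.5, 0.0, 0.2], ref_angles, [0.0, 0.0, 0.0], ref_velocities)
print(perfect, sloppy)
```

A reinforcement learning algorithm would then adjust the controller so that the sum of these scores over a whole motion clip is as high as possible.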

The results are remarkable. DeepMimic-trained characters can convincingly replicate a wide range of human movements, from running and jumping to more complex actions. The accuracy and fluidity of the movements are visually striking, blurring the line between digital and real.

Beyond Human Movement: Versatility and Robustness

What sets DeepMimic apart is its versatility. The system isn’t limited to a single character or movement style. It can adapt to different body morphologies, allowing it to control a diverse range of digital characters.

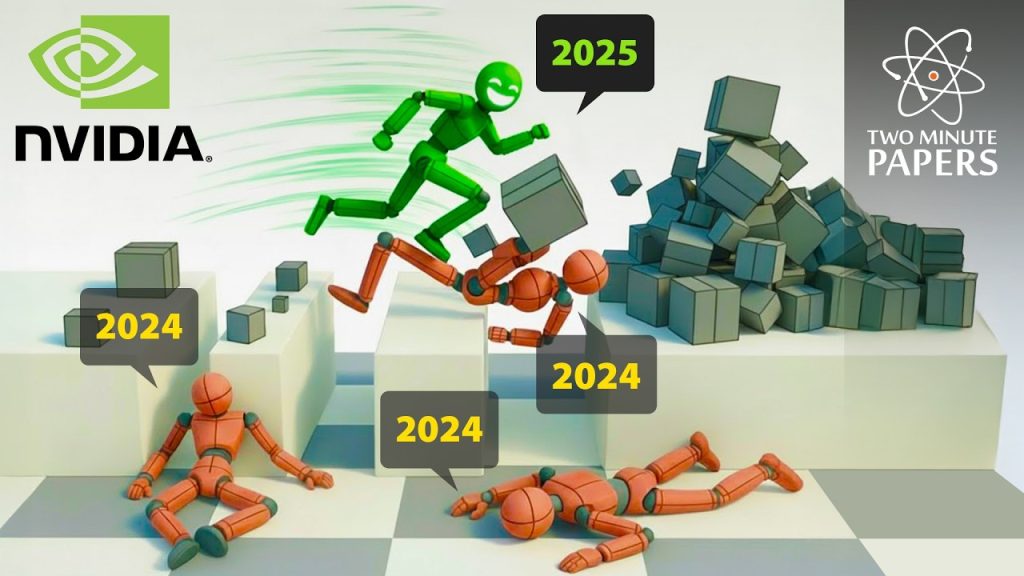

Furthermore, DeepMimic-trained characters exhibit impressive robustness. They can maintain their balance and recover from disturbances, such as being hit by virtual objects. This is crucial for creating believable characters that can interact dynamically with their environment.

The researchers even explored the creative potential of DeepMimic, demonstrating how it could be used for “art direction.” They showed how to influence the character’s performance, asking it to “dance more vigorously” and inject more “life” and “energy” into its movements. While the results might not always be aesthetically pleasing, they highlight the potential for DeepMimic to be used as a tool for creating unique and expressive digital characters. They also show a character that appears tired after a kung fu routine, suggesting that the method can mimic post-exercise behavior as well.

The Future of Digital Animation

DeepMimic represents a significant step forward in the quest for realistic digital animation. It demonstrates the power of reinforcement learning to tackle complex problems in character control and motion imitation. By turning motion imitation into a game, DeepMimic has opened up new possibilities for creating lifelike digital characters that can move, interact, and behave in ways that were previously unimaginable.

While challenges remain, DeepMimic provides a glimpse into the future of digital animation, a future where virtual characters are no longer stiff, robotic imitations of humans, but dynamic, believable individuals capable of captivating audiences and pushing the boundaries of storytelling.

The ability of DeepMimic to adapt to different body types, recover from disturbances, and even be directed artistically suggests its wide applicability in diverse fields, from game development to robotic simulations. As the field of AI and reinforcement learning continues to evolve, we can expect even more sophisticated techniques to emerge, further blurring the line between the digital and the real. The future of digital characters is bright, and DeepMimic has played a pivotal role in paving the way.

I can't tell you how excited I get when I see a new Two Minute Papers video in my notifications!

9:42 Hah! He said the thing! 😁

aint no jumping here they dont pay me enough 🤣🤣🤣 i love this channel

Can't wait to be able to run this on my own computer!

Idk

I think if this tech ever makes it to games it would be more realistic if there sometimes were failure cases…that would be more humanlike and fun to experience I think

as long as it was not everytime

Love it.

LOVE IT!!!

https://www.youtube.com/watch?v=SZpQfXNDulo

For those who want the ADD link

Thank you for this. It will help with our robotics work.

Have you guys realised that all these are coming from China? China is leading the world in scientific development, scientific paper production and quality, ever since the US stopped funding their universities and schools and became more preoccupied with discussions about if a woman should die because she had a miscarriage and interpreted their god's "teaching" as it being a big nono to remove the fetus or which bathroom anyone should use.

this looks like it can have immediate applications in movie and game animation

Now I want this to be added to all the games I already have that feature limb-based combat.

If you want to try for yourself what the AI has to do, play the game Toribash.

AI stuff is making Simulacra & Simulation more relevant by the day 😭

Held together by duct tape and the tears of PHD students is how lots of technologies start.

Wii fit tennis does better to be honest

now we only need to train it to follow arbitrary paths through arbitrary geometry and to never give up

I don’t care abt those people saying robots taking over the world, this stuff is cool asf

So the main benefit is to apply the movement to different body types?

really kind.

the long awaited sequel to QWOP is here: QWEETYUIOPASDFGHJKL

The voice-over sounds like every word was recorded separately.

this has to be the most casual and fun video from this channel! And I love it 🌸

thank you for giving an audience to the amazing work by fellow researchers

THE MIMIC???

oh hell no…

"GREGORY, HAVE YOU HEARD OF AMONGUS GREGORY?"

What can this be used for ?

Toribash hacks going crazy

The first 5 (out of 6) authors of the paper don't seem to be affiliated with NVIDIA, but with Simon Fraser University and Sony PlayStation.

And even the last author, while being a research scientist at NVIDIA, is still an Assistant Professor at SFU.

I don't think it's fair calling it "NVIDIA’s New AI".

What a great video with awesome commentary

love your positivity

Games need this. The better the graphics get, the more the unrealistic character movement stands out.

Its like watching close combat all terrain killer androids taking their first steps. So cute

I'm confused why you say that you don't see ADD being better at 4:43. ADD is flawlessly following the reference while DM is consistently failing to replicate the "spins".

Loving the fluid movement

Oh. "ANNUALLY". I must cleanse mine ears.

Guys I am new here are 2 minute papers usually 10 minutes long? 😆

I don't understand why it took them so long to come up with this, unless the programming is just a complete nightmare. Nor do I understand why it requires an AI judge, though my idea of a solution could probably be referred to as one. Why couldn't they have just used the physics of the reference as a point of gauging its fitness? Each iteration of the simulation model's fitness, I mean. Like, that's what's necessary, right? Why wouldn't they just check to see if the velocity of each individual joint is matching the reference at every point in the animation? They could have used that to gauge fitness 8 years ago. They wouldn't have had to have an AI judge (unless that's exactly how the judge works).

I wonder if you can teach it or have it learn to do superhuman acrobatics and turn them into Jedi parkour ninjas without needing to change the gravity parameter. It'd also be cool if you can then pseudo lock the execution/performance of actions so they behave consistently in the style you want. That way you can tune and give personality to an agent's actions rather than have every agent move and act the same way just because their body types are the same. Obviously the general schematic for basic actions would remain the same, but personalized within those restrictions: similar to how everyone has a slightly different way of standing, walking, holding an object which reflects their personality, yet they are still bound by physical limitations. I wonder how far can this technology be pushed and how intuitive can the parameter system be made now and several papers down the line.